Emerging AI Privacy Threats: Challenges and Solutions

As artificial intelligence (AI) evolves rapidly, concerns about the privacy threats it introduces are growing. These threats put personal data and user privacy at risk, and they can erode trust in AI systems as a whole.

One major concern is data breaches. AI systems collect, store, and process large volumes of sensitive information, so a single exploited vulnerability can expose personal data to unauthorized access and misuse. Robust data security measures, such as strict access controls and encryption of stored and transmitted data, are essential to reduce this risk.

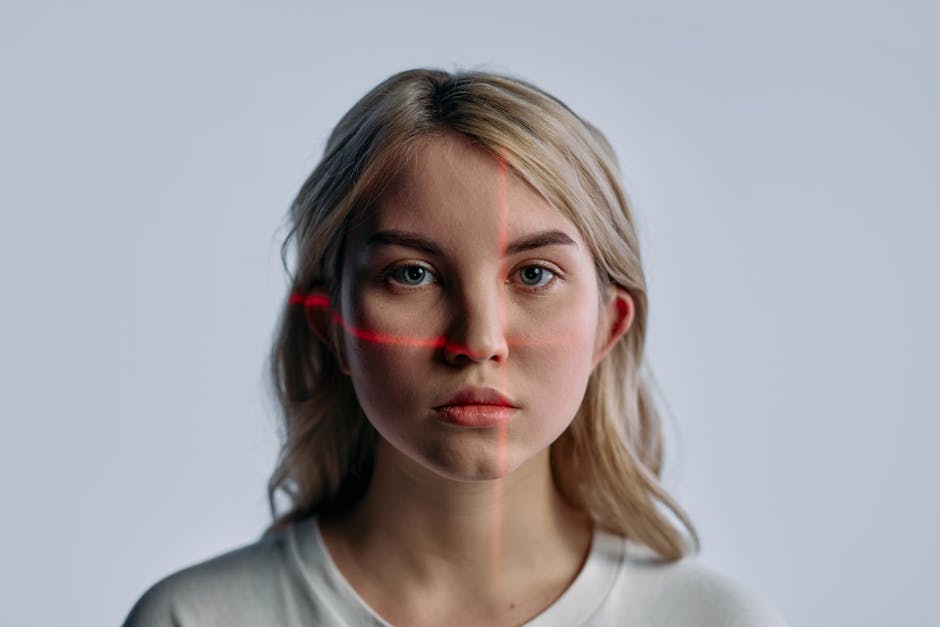

Another pressing issue is privacy infringement by AI itself. Sophisticated algorithms can infer private details about people from publicly available data, which raises ethical questions about surveillance and consent. Privacy-preserving techniques such as differential privacy can help: they add carefully calibrated statistical noise so that results reveal little about any single individual while remaining useful in aggregate.
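To make the idea of calibrated noise concrete, here is a minimal sketch of the Laplace mechanism, one standard way to achieve differential privacy, applied to a simple counting query over in-memory records. The function names (`laplace_noise`, `private_count`) and the sample data are hypothetical and chosen only for illustration; the noise scale of 1/ε follows from the fact that a count changes by at most 1 when one person is added or removed.

```python
import random

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponentials with mean `scale`."""
    # expovariate takes a rate (1 / mean), so each draw has mean `scale`.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count under epsilon-differential privacy via the Laplace mechanism.

    A counting query has sensitivity 1 (adding or removing one person changes
    the true count by at most 1), so the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical example data: ages of individuals in a dataset.
rng = random.Random(42)
ages = [23, 35, 41, 52, 67, 29, 38]
noisy = private_count(ages, lambda age: age >= 40, epsilon=0.5, rng=rng)
print(f"noisy count of people aged 40+: {noisy:.2f}")
```

The privacy parameter ε controls the trade-off: a smaller ε means a larger noise scale, so each released count says less about any individual but is also less accurate.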

Developers and policymakers must both stay vigilant: policymakers by establishing regulations for AI privacy, and developers by building AI systems transparently. Collaboration among stakeholders is vital to create guidelines that safeguard user rights without stifling innovation.

To stay ahead of these threats, organizations should invest in privacy technologies that strengthen data security, protect user anonymity, and support ethical data use. By addressing these challenges proactively, society can harness AI’s benefits while minimizing its risks.
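As one illustration of protecting user anonymity (a technique not named in the text above, included here as an example), a k-anonymity check measures how well a dataset resists the kind of linkage with public data described earlier. A table is k-anonymous if every record shares its quasi-identifier values (attributes such as ZIP code or age that could be matched against outside data) with at least k-1 other records. The sketch assumes records are Python dicts whose values have already been generalized; the function name `k_anonymity` and the sample rows are hypothetical.

```python
from collections import Counter
from typing import Iterable, Mapping, Sequence

def k_anonymity(rows: Iterable[Mapping[str, object]],
                quasi_identifiers: Sequence[str]) -> int:
    """Return k: the size of the smallest group of rows that share identical
    values on every quasi-identifier column."""
    groups = Counter(tuple(row[col] for col in quasi_identifiers) for row in rows)
    if not groups:
        raise ValueError("cannot compute k for an empty table")
    return min(groups.values())

# Hypothetical, already-generalized records (ZIP prefix and age band).
rows = [
    {"zip": "021**", "age": "30-39", "diagnosis": "flu"},
    {"zip": "021**", "age": "30-39", "diagnosis": "asthma"},
    {"zip": "021**", "age": "40-49", "diagnosis": "flu"},
    {"zip": "021**", "age": "40-49", "diagnosis": "diabetes"},
    {"zip": "946**", "age": "30-39", "diagnosis": "flu"},
]

# prints 1: the single 946** record is unique on (zip, age), so it could be re-identified.
print(k_anonymity(rows, ["zip", "age"]))
```

A high k is not sufficient on its own: if every record in a group shares the same sensitive value (for example, the same diagnosis), that value is still disclosed, which is why k-anonymity is usually combined with other safeguards.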